Lesson 5: Thread and Concurrency

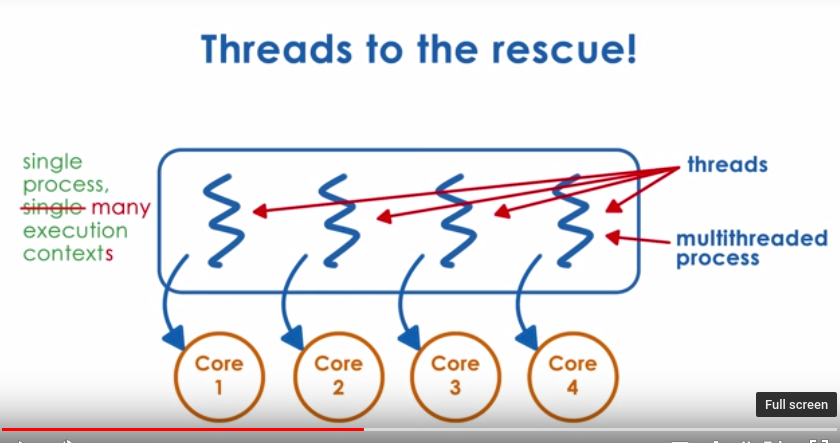

Single process with many execution contexts (--> multi thread)

Visual Metaphor

- A thread is like a worker in a toy shop:

- is an active entity

- Executing unit of toy order

- works simultaneously with other

- many workers completing toy orders

- requires coordination

- sharing of tools, parts, workstations

- is an active entity

- A thread is:

- is an active entity

- executing unit of a process

- works simultaneously with others

- many threads executing(where the concurrency applies )

- requires coordination

- sharing of I/O devices, CPUs, memory...

- is an active entity

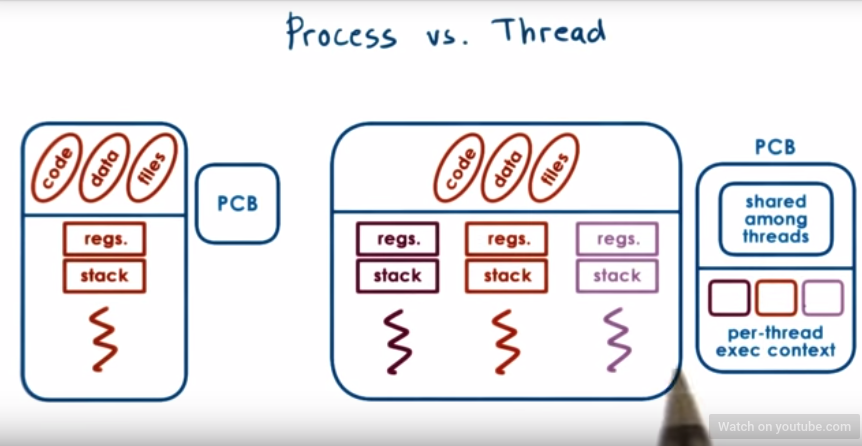

Process vs Thread

Benefits of Multithreading: Lower mem requirement + cheaper IPC

- palatalization --> speed up!

- Although threads executing the exact same code, they are not necessarily executing the exact same instruction at a single point in time!

- Such that every single one of them will need to have it's own private copy of the stack, register, program counter (PC).

Specialization --> distribute Priority to threads + Hot cache!!

- Threads may execute completely different portions of the program

- Priority : We can give higher priority to those threads that handle more important tasks/customers

- Performance is dependent on how much state can be present in the processor cache. each thread running on a different processor has access to its own processor cache==> HOTTER cache!

- Threads may execute completely different portions of the program

Why not just write a multi-process app?

- Memory Issue: Processes do not share an address space! (have to allocate for every single process with AS (address space) and EC(execution context)

- Memory space requirement is too large!

- Communication issue: passing data among processes or synchronizing among processes requires IPC (interprocess communitation) mechanisms that are more costly!

- Memory Issue: Processes do not share an address space! (have to allocate for every single process with AS (address space) and EC(execution context)

While a multi-thread implementation results in threads sharing one AS!

- Multi-thread is more memory efficient! (Mem issue solved!)

- Communication and synchronization among threads in a single process is performed via shared variable --> does not require the same kind of costly IPC! (MUCH lower overheads for inter thread communication than inter process alternatives)

Benefits of Multithreading for a Single CPU (or when #of threads > # of CPU):

- if the t_idle is sufficiently longer (longer than 2 times CS) than the time for it takes to make a context switch, Then it is reasonable to context switch from T1 to T2, have T2 do some operation, then switch back!

- if $$t{idle} > 2 \times t{context switch}$$ :

- then context switch to hide idling time

- $$t{ctxswitch threads} < t{ctxswitch processes}$$

- Hide Latency : The shorter the Time for Context switch, there will be more of these T_iddle situations when a thread is idling where it make sense to do a context switching to anothe thread

- This hide the wasted idling time! ==> Hide the latency!!!

- if $$t{idle} > 2 \times t{context switch}$$ :

- Although the above is true for both Process and threads:

- One of the most costly steps during context switch for Process is the time that required to create a new virtual to physical address mapping for the new process that will be scheduled.

- Thread share address space: --> the above costly step is avoided!

Benefits to app and OS

- by multithreading the OS kernel, allowed the OS to support multiple execution contexts, particularly useful when there are multiple CPUs! --> the OS context can execute concurrently on different CPUs in a multiprocessor or multicore platform

- OS - level services like deamons or drivers;

- Thread working on behalf of apps

Quiz

Threads Process Both

- T can share a virtual address space

- P take longer to context switch

- B have execution context

- T usually result in hotter caches when multiple exist

- B make use of some communication mechanisms

Basic Thread mechanism

What do we need to support threads?

- thread data structure

- identify threads, keep track of resource usage

- Mechanisms to create and manage threads

- mechanisms to safely coordinate among threads

- running concurrently in the same address space

- make sure don't overwrite each other's result

- need mechanisms that allow one thread to wait on results that should be produced by some other threads

- running concurrently in the same address space

Threads and concurrency:

First take a look at Processes:

- When processes run concurrently: they each operate within their own address space (AS)

The OS together with the underlying hardware will make sure no access to one AS is allowed to perform on memory that belongs another AS.

ex. let PhAddr-p1 X belong to VA-p1:

VA-p1 --> PAx [valid]

VA-p2 --> PAx [NOT valid!]

Now check out threads:

- When thread run concurrently : share the virtual --> physical mapping!

- T1 --> PAx [valid]

- T2 --> PAx [valid]

- Problems: if Both T1 and T2 are allowed to access the data at the same time and modify at the same time, this could end up with some inconsistencies!

- ex1. one trying to read the data while the other is modifying it ---> just read the garbage

- ex2. both Threads are trying to update the data at the same time ---> update overlap

This type of data race problem where multiple threads are accessing the same data at same time is common in multithread environments where thread execute concurrently.

To deal with this concurrency Issue:

Synchronization mechanisms. (focus on mutex and condition variable)

- Mutual Exclusion mechanism:

- exclusive access to only one thread at a time.

- Mutex

Waiting on other threads

- specific condition before proceeding

- ex. a thread dealing with shipment processing must wait on all the items in a certain order to be processed before that order can be shipped.

- condition variable to handle interthread coordination.

- specific condition before proceeding

Waking up other threads from wait state (discuss this in following lessons)

Threads and Threads Creation

Thread type:

- thread data structure: Thread type

- ThreadID

- PC (program counter)

- SP (stack pointer)

- registers,

- stack,

- attributes

- thread data structure: Thread type

Creation: Fork(proc, args) : proc: procedure that created thread will start executing; args: arguments for this procedure

- create a thread

- Not to be confused with UNIX fork for new process!

- ex. when Thread T0 calls a fork, a new thread T1 is created, --> a new thread data structure of this type is created and its fields are initialized such that its PC will point to first instruction of the procedure proc and these args will be available on this stack of the thread

- After fork, T1 and T0 can both execute concurrently

- T0 will execute the next operation after the fork call and T1 start executing first instruction in proc with the specified args.

- When T1 finished

- one programming practice would be to store the result of T1's computation in some well-defined location in AS that is accessible to all the threads. and they have some mechanism to notify either the parent or some other thread that the result is now available

- More generally, we needs some mechanism to determine a thread is done, and if necessary to retrieve its result or at least determine the status of the computation.

Join (thread): called by the parent thread

- terminate a thread:

- when the parent thread calls join with the thread ID of the child thread, it will be blocked until the child completes.

- Join will return to the parent the result of the child computation.

- Then at this point, the child exits the system, and any allocation data structures saved for the child will be freed.

- Notice: The parent that's joining the child in all other aspects, the parent and child thread are completely equivalent! they can all access all resources that are available to the process as a whole and share them! (True to both hardware resources, CPU mem, actual state within the process)

- terminate a thread:

Thread creation example:

the sequence of who finish first is not determined, both out come could be true; 4->6->null

4->6->null

6->4->null Both are possible! Because there is No Mutex!

Mutual Exclusion Mechanism: when both parent and child try to update the shared list at the same time.

Mutex: like a lock, should be used whenever accessing data or stayed that shared among thread.

- use the term: acquire the mutex/ lock to refer to this operation

- The unsuccessful threads will be blocked on the lock operatoin, until the lock holder released it.

- Mutex should at least contain information about is status, and also should contain owner, a list of blocked_thread.

Critical section code should correspond with any kind of operation that requires only one thread at a time performs this operation. (perform any type of operation that requires mutual execution between the threads.)

- ex. update the shared variable like the list

- ex. incrementing/decrementing counter

Other than critical section, the rest of the code in the program, the threads execution concurrently.

Birrell's Lock API: upon acquiring a mutex, a thread enters the locked block, and when it exiting the block (leaving the ending } ), the thread releases this mutex and frees the lock

Common Thread API: lock(m) and unlock(m);

Mutex example The result would then be 4 - > 6 - > nil

The result would then be 4 - > 6 - > nil

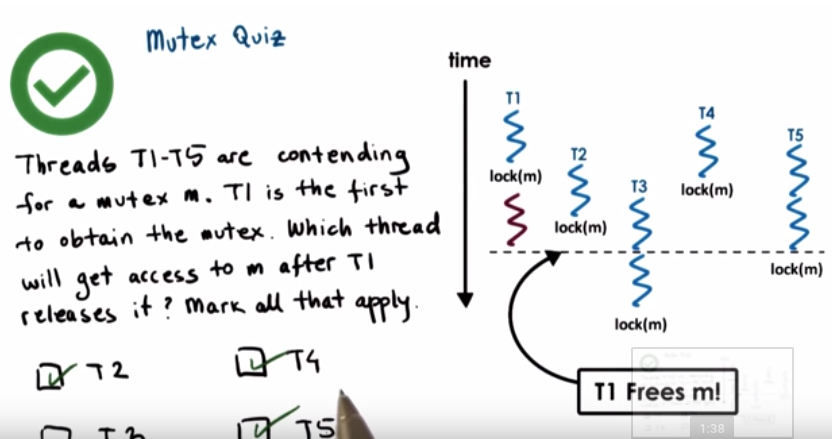

Mutex Quiz

who might get? check the viable candidates!

Producer/ Consumer example

if you wish to perform with mutual exclusion need to occur only under certain conditions pseducode for this example:The Consumer lock part is WASTEFUL!!!

pseducode for this example:The Consumer lock part is WASTEFUL!!!

A better approach is to use a condition variable!

Condition variable

- condition variable is suggest to be used in conjunction with Mutexes to control the behavior of concurrent threads.

The Modified version of the consumer and producer example: (Wait + signal)

- the mutex that was acquired when we got at wait, has to automatically released when we go into the wait statement, and automatically reacquired once we come out of the wait statement.

Condition variable API

- Condition type

- wait(mutex, condition)

- mutex is automatically released and reacquired on wait.

- Signal(condition)

- notify only one thread waiting on condition. (waking it up, one thread at a time)

- Broadcast(condition)

- notify all waiting threads. (waking all up!)

Condition variable data structure must have:

- mutex ref

- A list of waiting threads

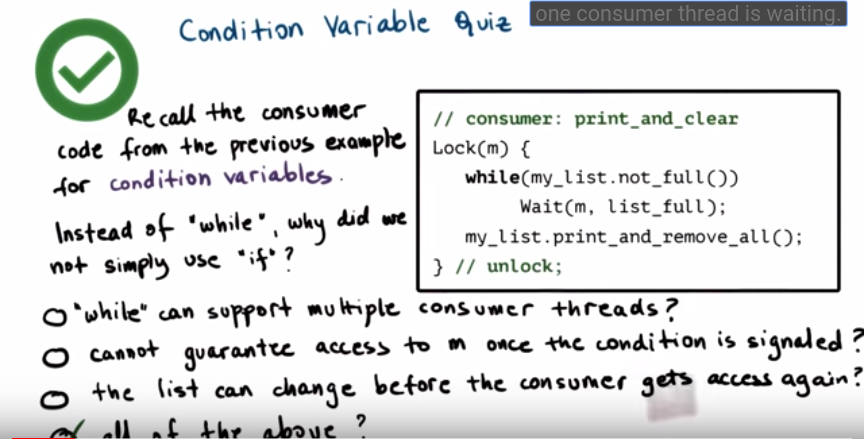

Quiz

All of the above! thus have to use WHILE!

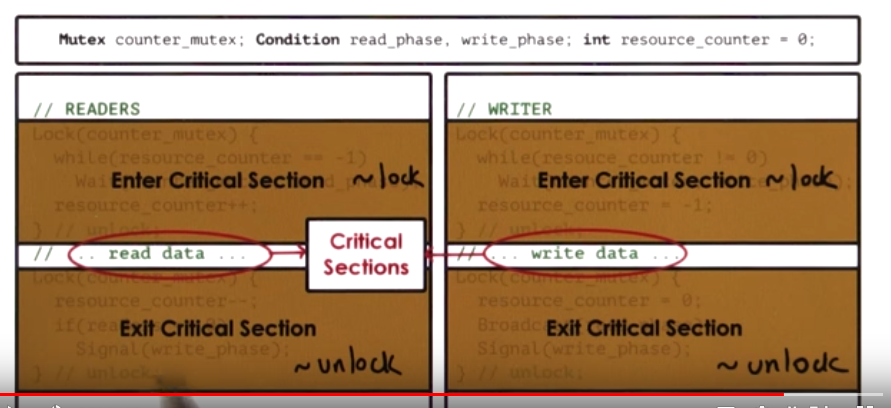

Readers/ writer problem

- some of the thread works as reader an access the shared state for reading

- 0 or more of the reader threads can access this resource concurrently.

- other set of thread works as writer that want to access that same shared variable, shared state and modify it

- only 0 or 1 writer thread can access the resource concurrently.

- State of share file/resources:

- free: resource.counter == 0;

- reading : resource.counter > 0;

- writing: resource.counter == -1

if(reader==0) should be if(reader.counter == 0 )

if(reader==0) should be if(reader.counter == 0 )

- the wait function wait(Mutex, wait-for-what); and while it's wait, the mutex is automatically released

- Signal(write_phase) will send signal to Wait(counter-mutex,write-phase) and let the writer wake up and do his things!

- next the writer will be removed from the wait queue.

- Broadcast(read_phase) will wake up all the queuing waiting read phase, one at a time.

- Yes, only one wake up at a time, but we do want multiple threads to be woken up so that multiple threads can ultimately reach the read phase.

- The sequence of Broadcast first then signal writer indicates there is a priority for readers.

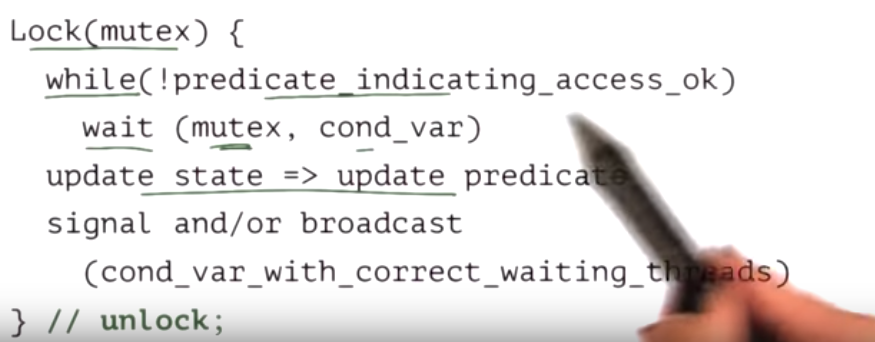

Typical Critical section Structure

Critical section structure with Proxy variable

Avoiding Common pitfalls(陷阱)

- keep track of mutex/cond variables used with a resource

- eg. mutex-type m1; // mutex for file1

- check that you are always(and correctly) using lock and unlock

- use a single mutex to access a single resource!

- check that you are signaling correct condition

- check that you are not using signal when broadcast is needed

- signal: only one thread will proceed, remaining threads will continue to wait

- ask yourself: do you need priority guarantees?

- threads execution order DO NOT controlled by signals to condition variables.

- Other pitfalls: spurious wake ups & dead locks!

Spurious/ unnecessary wake ups

Spurious wake ups == when we wake threads up knowing they may not be able to proceed.

- if(unlock after broadcast/signal) ==> no other thread can get lock.

the wake up is unnecessary! thread wait for read-phase ---> then wait for counter-mutex

- this will waste cycles by context switching these threads to run on the CPU then back again to wait on the wait queue.

How about always unlock the mutex before broadcast/signal?

- writer can!

- reader can NOT, (rely on the value of resource-counter!)

DeadLocks (one of the most scariest problem with multithreading)

DeadLock: Two or more competing threads are waiting on each other to complete, but none of them ever do. (so these thread get stuck!)

metaphor: two workers work on orders that involves a train, ,and each worker will need a soldering iron (烙铁) and a solder wire (焊丝) to finish their toy. The problem is there is only one of each of those!

A real world example:

- T1 and T2 both need two shared variable A and B: T1 first lock mutex for A and then lock the mutex for B; T2 first lock mutex for B and then lock the mutex for A. ==> T1 has m-A and T2 has m-B ==> Cycle!

How to avoid this?

- Sol 1: Unlock A before locking B ?==> fine-grained locking

- but threads need both A & B.

- Sol 2: Get all locks upfront, then, release at the end

- Use one MEGA lock

- for some app is OK

- Too restrictive , limits parallelism

- Sol 3: the best solution: Maintain lock order

- first m-A

- then m-B

- This will prevents cycles in wait graph! and ensure no deadlock!

Deadlock summary:

A cycle in the wait graph is necessary and sufficient for a deadlock to occur! (充要条件:cycle) ==> 算法题: Linked List Cycle

- edges from thread waiting on a resource to thread owning a resource.

What can we do about this?

- deadlock prevention: always maintain lock order --> EXPENSIVE

- deadlock detection & recovery 算法题: Linked List Cycle --> ROLLBACK, again expensive

- Apply the Ostrich Algorithm .... Do Nothing!

- If all else fails ... REBOOT.....

Quiz

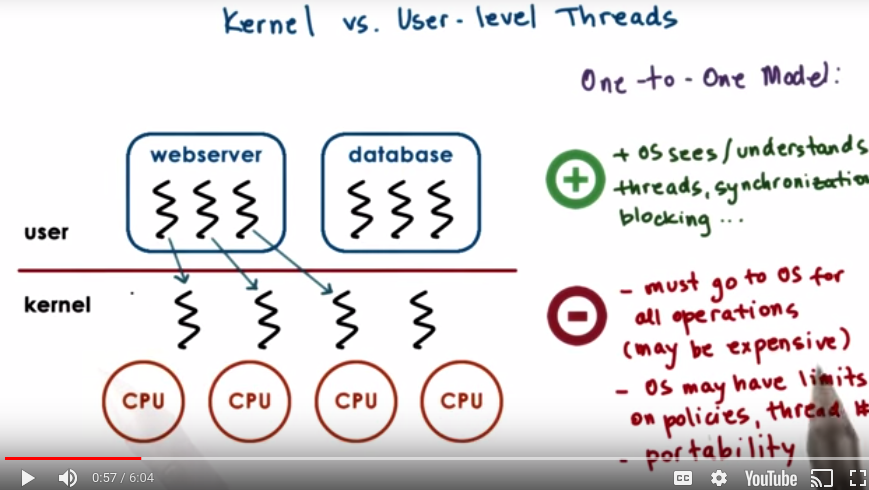

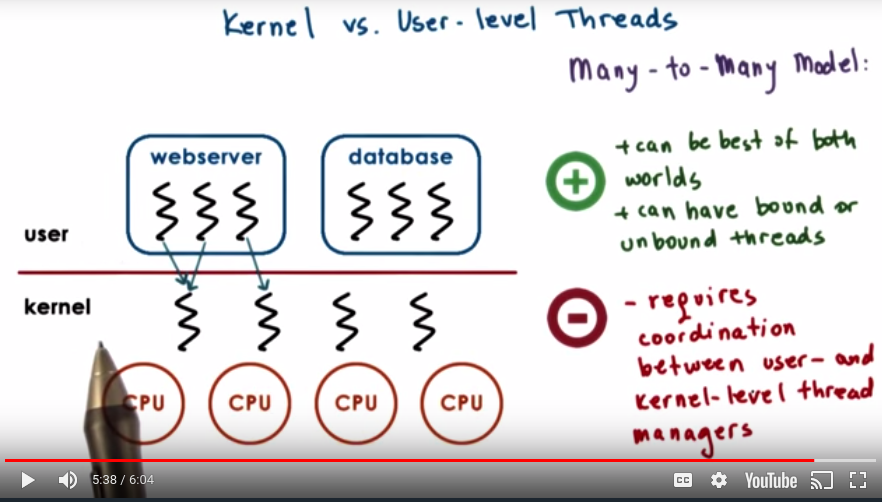

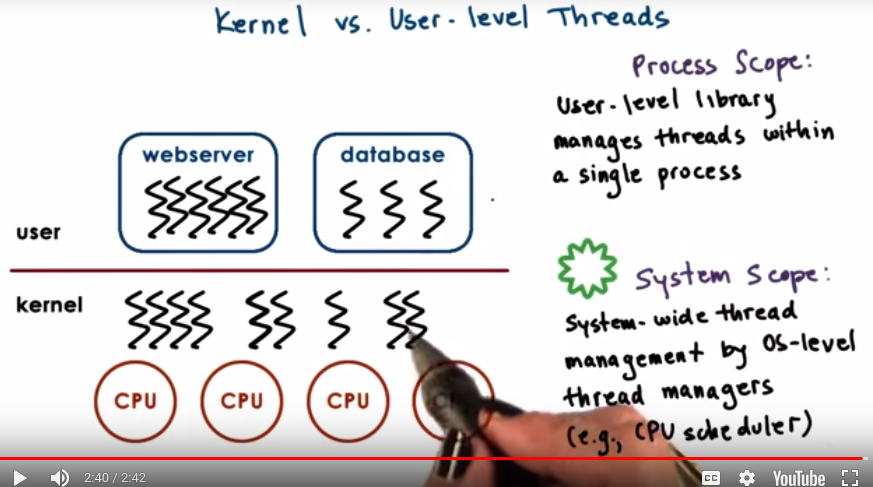

Kernel vs User-level Threads

one to one model:

many to one model:

many to many model

scope of multithreading

Multithread patterns

- Boss- worker

- Pipeline

- Layered

Example toy shop app:

- accept the order

- parse the order

- cut wooden parts

- paint and add decorations

- assemble the wooden toys

- ship the order

boss worker pattern

Two ways for boss to assign work:

- placing work in producer/consumer queue -----> tend to pick this one!!!

- regardless the downside, it still results in lower time per order that boss needs to spend, and result a better throughput of the system overall.

- directly signalling specific worker

How many workers?

- on demand (dynamically)

- A more common model: pool of workers.

- allow the poll to be dynamically increased in size: will create several threads whenever we determine that pool size needs to be adjusted! (rather than create one at the time for the on demand approach )

summary of boss- worker model:

- Boss-worker:

- Boss: assign work to workers

- Worker: performs entire task

- Boss-worker communication via producer/consumer queue

- worker pool : static or dynamic

- Pro: simplicity

- Cons:

- thread pool management (including synchronization for the shared buffer)

- ignores locality: boss doesn't keep track of what anyone of the workers are doing last.

- a worker just finish a similar/identical type of task, more likely, that particular worker will be more efficient at performing that exact same task in the future. Boss don't paying attention to what worker's doing, hard to make this optimization

Boss worker variants

- all workers created equal

- workers specialized for certain tasks (boss has a bit more work to distribute the task, while workers works more efficiently) --> better performance

- Pro: Better locality: Quality of Service management (state present in cache! hot cache!)

- Cons: more complicated to do the load balancing

Pipeline Pattern

Pipeline:

- Threads assigned one subtask in the system

- entire tasks == pipline of threads

- multiple tasks concurrently in the system, in different pipeline stages.

- throughput == weakest link ==> pipeline stage = thread poll

- Use shared-buffer based communication b/w stages ( the queue based approach)